Roundup #20: Why would AI take jobs we could have automated 10+ years ago? Streaming TV, and AI 2027

A quick roundup of some stuff on my mind

Yesterday I picked up a rental car in Australia. A tired and annoyed clerk at the counter was handling Hertz, Thrifty and one more company (something with Dollar). He manually typed some of our details into his PC, explained a bunch of things and gave us a key. The technology to automate this entire process cost-effectively has existed for about 12 years (it more or less needs cloud services and smartphones to work well). But we haven’t done it. No amount of AI is going to suddenly automate that job. It’s a good reality check when it comes to the crazy doom stories about AGI.

A great post about Streaming TV.

The basic argument is that streaming TV shows hit a wall where they become just ‘cliffhanger slop’, focused only on keeping you watching. The entire incentive structure of streaming is built around taking as much of your time as possible.

An insightful part of the article:

The specific problem I have with new (or new-ish) streaming TV shows is that they often ask for a double-digit hour time commitment to receive a pay-off (and those pay-offs either don’t come or just straight up suck).

When someone tells you “seriously bro, you HAAAVVEEE to watch [new narrative TV streaming show X]”, they’re about to tie up 20 hours of your life.

Other TV formats are much easier to start and stop. Cooking shows. Home-hunting shows. Dating shows. 1990s sitcom shows.

Same with film. A 2-hour watch and you’re done.

What about social apps like X or Instagram or TikTok? These are definitely a time-suck — and the media equivalent of Doritos — but seems we all know they are a time suck and often respond to the “time sucki-ness”. When I’m deep in the doom-scroll, my brain says “wow, I’m fried and need to take a break” (at a minimum, I feel awful after).

The piece gives great advice: don’t start any show until it has finished its run, so you can accurately judge whether it’s going to be worth your time.

AI 2027: The viral piece of future projection

To start, I do think this is worth a read. It is a very well-made website that lays out an interesting potential scenario for the AI future, in which ‘AGI’ arrives in 2027. The article does a particularly good job of explaining the issue of alignment (what might an AI’s objectives be, and how might we control them?). However, it could do better at explaining and unpacking some of its core premises.

First of all, the article presumes that LLMs are the key breakthrough on the path to AGI. As a daily user of the best models and agents, I don’t believe this is self-evident at all. In fact, I think there is a pretty high chance that these agents will get stuck at something like 90% of human ability for a few decades. Coding agents are still really quite bad, and general-purpose agents like Manus are worse.

Iron-manning this point: one could argue that the problems with agents are almost all context problems. The article implicitly makes this argument when it presents ‘always-on’ agents that run constantly as the key step towards AGI. The idea is a chain of thought cycling recursively through the model, at the maximum context length. This is compelling. I do believe this approach will create a much more capable agent, and I am quite confident it is already being done internally at the top labs. Imagine a human ‘LLM’ who is only switched ‘on’ briefly to answer a specific question with very limited information. They would find it very hard to do well on any task. That is how LLMs operate now.

Secondly, the article presumes that OpenBrain (essentially OpenAI) will keep its lead, and that this translates into ever larger profits. The writers still assume a winner-take-most dynamic in the model layer. That seems increasingly unlikely to me. The model layer looks more likely to become commoditized: smart models will be everywhere and cheap, and in the end it will hardly matter whether you use OpenAI, Anthropic, Google, DeepSeek, or one of the many other models that already exist.

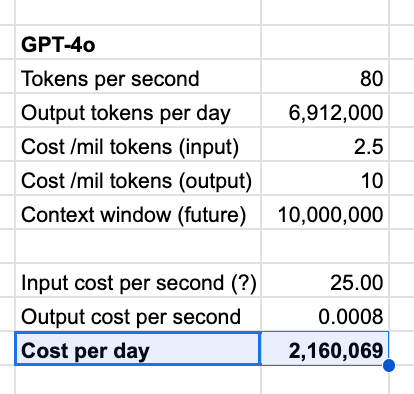

Iron-manning this point: if the previous assumption holds, that the agent needs to think out loud continuously and loop that train of thought through the model, then capital will continue to be a moat. Running such an agent would be very expensive, as much as $2 million per day.

In that case, there could be a winner-take-all dynamic with capital as the moat, where only the biggest players can raise enough money to run true AGI agents. But even then, that advantage would be temporary, since compute costs keep coming down (unless compute somehow gets monopolized?).

Anyway, it’s easy to go deep down this rabbit hole. I think it is very difficult to predict what will happen. The writers of AI 2027 are credible, and they did a great job writing this scenario, but like all forecasts, it is much more likely to be wrong than right.