Roundup #29: The demo-to-product gap, AI in healthcare, Scam Inc, Hooligan business cards, and being -pilled

A decade to AGI, Real but modest gains from AI, Scam empires scaling faster than AI, Saudi Arabia's sort-of liberalization, Hooligan business cards, and being -pilled

What caught my eye? Quite a lot actually. I’ve put big old H1 headers below to separate the AI Stuff from the Non-AI Stuff. We got mixed results from Gen AI pilots, Karpathy’s bombshell interview where he said AGI is a decade away, but also Pig Butchering scams and Saudi Arabia’s cultural change. Let’s dive in!

AI Stuff

Andrej Karpathy says AGI is a decade away

We have to start with the interview that’s getting all the air-time right now: Dwarkesh Patel’s interview with Andrej Karpathy. Why it matters, in two sentences:

Karpathy is one of the world’s leading innovators in AI, a co-founder of OpenAI, and leader of Tesla’s self-driving tech from 2017 until 2022 (arguably it started working during that period).

His interview was nuanced and authentic, but also filled with zingers that line up with the current vibe: that AI isn’t ‘there’ yet and probably won’t be for a while.

The LinkedIn clickbait pretty much writes itself. So what did he really say?

Agents Don’t Work Yet:

“Currently, of course they can’t [replace employees]. What would it take for them to be able to do that? Why don’t you do it today? The reason you don’t do it today is because they just don’t work. They don’t have enough intelligence, they’re not multimodal enough, they can’t do computer use and all this stuff.”

The Cognitive Gaps:

“They don’t have continual learning. You can’t just tell them something and they’ll remember it. They’re cognitively lacking and it’s just not working. It will take about a decade to work through all of those issues.”

In Andrej’s view, the problems are solvable, but AI has repeatedly tried to run before it could walk (Clippy, reinforcement learning, early computer agents). His decade estimate comes from hard-won experience watching the field repeat this mistake.

However, the current moment is different because with LLMs, we finally have a form of understanding and a form of reasoning that earlier agent attempts lacked. But we still need multimodality, continual learning, and actual reliability to get to employee-level AI.

So: He does feel that LLMs are in fact a key unlock in working towards useful AI agents, but that there are still a lot of problems. In his experience from research, each of those problems is likely going to take years to solve, and hence adding them up, “If I just average it out, it just feels like a decade to me.” I want to highlight two more short passages from Andrej’s answers that I felt were very insightful.

First, on self-driving cars. He talks about getting a perfect Waymo ride in 2014, and coins a great term very applicable outside of self-driving: demo-to-product gap.

Fast forward. When I was joining Tesla, I had a very early demo of Waymo. It basically gave me a perfect drive in 2014 or something like that, so a perfect Waymo drive a decade ago. It took us around Palo Alto and so on because I had a friend who worked there. I thought it was very close and then it still took a long time.

For some kinds of tasks and jobs and so on, there’s a very large demo-to-product gap where the demo is very easy, but the product is very hard. It’s especially the case in cases like self-driving where the cost of failure is too high. Many industries, tasks, and jobs maybe don’t have that property, but when you do have that property, that definitely increases the timelines.

Lastly, some words from Andrej here on the AI Bubble.

Yeah. There’s historical precedent [with railroads]. Or was it with the telecommunication industry? Pre-paving the internet that only came a decade later and creating a whole bubble in the telecommunications industry in the late ‘90s.

I understand I’m sounding very pessimistic here. I’m actually optimistic. I think this will work. I think it’s tractable. I’m only sounding pessimistic because when I go on my Twitter timeline, I see all this stuff that makes no sense to me. There’s a lot of reasons for why that exists. A lot of it is honestly just fundraising. It’s just incentive structures. A lot of it may be fundraising. A lot of it is just attention, converting attention to money on the internet, stuff like that. There’s a lot of that going on, and I’m only reacting to that.

But I’m still overall very bullish on technology. We’re going to work through all this stuff. There’s been a rapid amount of progress. I don’t know that there’s overbuilding. I think we’re going to be able to gobble up what, in my understanding, is being built. For example, Claude Code or OpenAI Codex and stuff like that didn’t even exist a year ago. Is that right? This is a miraculous technology that didn’t exist. There’s going to be a huge amount of demand, as we see the demand in ChatGPT already and so on.

So I don’t know that there’s overbuilding. I’m just reacting to some of the very fast timelines that people continue to say incorrectly. I’ve heard many, many times over the course of my 15 years in AI where very reputable people keep getting this wrong all the time. I want this to be properly calibrated, and some of this also has geopolitical ramifications and things like that with some of these questions. I don’t want people to make mistakes in that sphere of things. I do want us to be grounded in the reality of what technology is and isn’t.

I really like this guy! He is in the middle. Transformational technology, but still just normal technology. Refreshing rhetoric from one of the key insiders.

The turning of the tide on LLMs, and maybe AI

It is remarkable how the tide is turning, all at once, after GPT-5. The opinion that LLMs might cap out seems to have been quite common. Richard Sutton said it in March 2025 (which is still super recent!). Karpathy and others have generally avoided being either super hyped or overly skeptical. Dwarkesh has asked probing questions about why LLMs are not doing any real innovation. But the only person who can really say “I told you so”, and is loudly, and by now somewhat obnoxiously, doing so every day, is Gary Marcus (we know, Marcus, you were right all along). But now that this point of view is ‘safe’, the floodgates are open.

Having considered it all, I think the shortest and simplest summary of where we stand on AGI is something like this:

LLMs are good at language, and are surprisingly, remarkably good at deciding when to call APIs (function calling). We can do a lot of cool stuff with that.

But they clearly lack understanding. Whatever it is they lack in more specific terms, or why they lack it from a technical standpoint, we don’t know, but they just don’t know what they’re doing.

For a while, it seemed like by just making them bigger, true understanding would ‘emerge’ in LLMs. But that didn’t happen.

Probably, they fundamentally lack understanding because all they know is language, and language is only a small part of animal intelligence.

A squirrel cannot ‘reason’, and it cannot solve math problems, yet it is smarter than an LLM in lots of important ways. It can plan over long time-horizons, it learns from experience, it can decide where the safest place is to build a nest, etcetera.

We still have no idea how a squirrel works, and we probably need to figure that out before we can have AGI.

Mini side quest: Why N8N is cool

It turns out that Andrej Karpathy has a blog: https://karpathy.bearblog.dev/blog/

But updates are only available as an RSS Feed. So I asked AI, in this case the sidepanel of my browser (proving that AI chatbots will be everywhere), how I can subscribe to an RSS Feed. It told me I need an RSS Reader. I don’t want to get an RSS reader, I read newsletters in my email. So I then asked if I can’t just use N8N or something to subscribe and forward new posts to my email. The answer is yes, and it was working 5 minutes later. Neat!
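For the curious, the core of that workflow (read the feed, work out which posts are new) can be sketched in a few lines of Python. This is a rough illustration of the idea and not what N8N does under the hood. The sample feed, the example.com URLs, and the function names `parse_feed` and `new_posts` are all made up for the example, and actually fetching the feed and sending the email are left out.

```python
import xml.etree.ElementTree as ET

# Atom feeds put their elements in this XML namespace.
ATOM = "{http://www.w3.org/2005/Atom}"

# Hypothetical sample feed; a real one would be downloaded from the blog.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <title>Example blog</title>
  <entry>
    <id>https://example.com/post-2/</id>
    <title>Second post</title>
    <link href="https://example.com/post-2/"/>
  </entry>
  <entry>
    <id>https://example.com/post-1/</id>
    <title>First post</title>
    <link href="https://example.com/post-1/"/>
  </entry>
</feed>"""


def parse_feed(xml_text):
    """Return (id, title, link) tuples from an RSS 2.0 or Atom feed."""
    root = ET.fromstring(xml_text)
    posts = []
    # RSS 2.0 style: <item> elements with <guid>, <title>, <link>.
    for item in root.iter("item"):
        link = item.findtext("link", default="")
        guid = item.findtext("guid", default=link)
        posts.append((guid, item.findtext("title", default=""), link))
    # Atom style: namespaced <entry> elements, link stored in an href attribute.
    for entry in root.iter(ATOM + "entry"):
        link_el = entry.find(ATOM + "link")
        link = link_el.get("href", "") if link_el is not None else ""
        entry_id = entry.findtext(ATOM + "id", default=link)
        posts.append((entry_id, entry.findtext(ATOM + "title", default=""), link))
    return posts


def new_posts(xml_text, seen_ids):
    """Return posts whose id is not in seen_ids, and remember them."""
    fresh = [post for post in parse_feed(xml_text) if post[0] not in seen_ids]
    seen_ids.update(post[0] for post in fresh)
    return fresh


# Pretend the first post was already forwarded on an earlier run.
seen = {"https://example.com/post-1/"}
for post_id, title, link in new_posts(SAMPLE_FEED, seen):
    print(f"New post: {title} -> {link}")
```

Run it on a schedule, keep the set of seen ids somewhere persistent, and replace the `print` with an email step, and you have roughly what the no-code version does for you.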

Generative AI pilots are in fact generating returns

A new study that looks pretty good, methodology-wise, found material gains driven by AI in eCommerce. Warning, this is a bit dry:

Among the five processes with detailed data, gains in sales range from no detectable effect in the advertising workflows to improvements of up to 16.3% in the Pre-Sale Chatbot application, consistent with prior research on GenAI’s impact on individual tasks in lab settings (see, e.g., Peng et al., 2023). In the Search Query Refinement and Product Description workflows, the effects are smaller—generally 2-3%—yet still substantial for a platform of this scale and maturity. Back-of-the-envelope calculations based on the four deployments with detailed transaction data and positive effects—annualizing workflow-specific gains and assuming linear additivity—suggest that these GenAI applications generate an annual incremental value of approximately $4.6–$5 per consumer. These effects represent roughly 5.5–6% of the increase in per-user revenue observed in global e-commerce between 2023 and 2024.

And also notable:

Taken together, these results show that GenAI generates sizable gains in targeted workflows and meaningful effects for a large and mature retailer, with further potential as adoption broadens and increasingly targets revenue-critical processes. For instance, while in 2023 the platform applied GenAI to only a handful of workflows, by 2024 it had expanded to more than 40 applications and by 2025 to over 60. At the same time, API calls to large language models increased twentyfold between 2024 and 2025, reflecting the rapid scaling of GenAI adoption across the platform.

This sounds like reality. A rollout of a new technology that offers real new capabilities in places where the process is text-in → text-out.

AI in Medicine, by a GP

If you don’t yet subscribe to Katz’s newsletter, I will again recommend that you do so immediately. He is the best writer on health topics on Substack. He is also actually an active clinician who sees patients every day. Something most influencers cannot say. I have learned so, so much from his pieces on Ozempic, cancer, exercise, supplements, and other topics. Now, he wrote a piece about the deployment of Gen AI in medicine over the past 2 years. The piece eerily mirrors what we read, hear and experience about AI in every other domain: The demos are cool, but the tech doesn’t work that well in practice. Katz also explains very clearly something else that is extra important in medicine compared to, say, marketing.

In medicine, proving something works means running clinical trials that show it improves real outcomes like mortality, quality of life, and hospital admissions.

It’s nowhere near good enough to say that “the AI can detect this thing” if you can’t also prove that “detecting this thing with AI and acting on it makes patients better off.”

Those trials take years and cost tens or hundreds of millions of dollars.

This is a somewhat nuanced point, and he explains this in a lot more detail in the piece. Demo-to-product gap.

What surprised me a bit was that even AI Notetakers (Scribes), which I thought would be a no-brainer improvement, are kind of ‘meh’.

In other words, AI scribes don’t help with the most annoying parts of being a doctor. They just help with drafting the narrative note, one of the least time-consuming parts of patient care.

My experience with these tools was middling. It saved a small amount of time, but introduced new problems:

Every new tool adds lag to the system (that 0.8-second delay between screens compounds)

You have to change how you communicate during visits to document properly

AI slop comes for the medical record because AI-generated notes contain too much irrelevant information

Interesting stuff.

Based on this example in medicine and the previous one in eCommerce, and generally the tone of my AI writing, you might almost think that I’m cherry-picking examples that are neither here nor there, in the messy middle. But I don’t think that’s true. All I’m doing is collecting real examples, written by people who have no other motive or incentive than to simply get this shit to work. This is the reality of AI so far. There are real, meaningful results like the eCommerce one, but it’s not magic, and hard human engineering work is needed to create them. Cue Steven Sinofsky (the legendary Microsoft engineer who led development of Office and Windows 7 among many other things).

Non-AI Stuff

Countries are starting to crack down on ‘pig butchering’ scams

I recently learned that scams are now the largest revenue source for global organized crime. This podcast series from Sue-Lin Wong at the Economist blew my mind. According to the podcast, an estimated half a trillion dollars ($500Bn) is being made in this business, which mostly ladders up to Chinese organized crime (i.e. the Triads).

Countries with weak governments and lots of corruption have had their states captured to a degree not seen since the Narco States in the 80s and 90s. The scam operations are run from entire office complexes, operating almost completely in the open. These operations regularly kidnap people and force them to run straight-up phishing scams, love scams, or the ‘pig butchering’ variety. Pig Butchering is a type of scam where scammers build a relationship with the victim over weeks and months. These relationships are not necessarily romantic, though they often are. Then, the scammers start getting the victim into some kind of investment scheme. Ultimately, once the victims are in too deep, the scammers take them for everything.

In just one crazy example: This bank CEO in rural USA lost $47 million of the bank’s and his church’s money. He landed in jail. It’s very common for countries and people to blame victims in these types of scams. To be honest, I also find it difficult not to put at least some of the blame on the victim. But on the other hand, these scammers are PRO. They practice hard every day to engineer the right scenarios and push the right buttons to make as much money as possible. Every victim thinks they’d never fall for it, until they do. Having been primed by the podcast and my work busting fraud at Carousell, fraud stuff is catching my eye more lately. Here are some scam-related things I found notable:

Korea has arrested ~60 Korean nationals implicated in scam operations in Cambodia. They were also kidnapping young Koreans after luring them to Cambodia with promises of a job.

The FBI seized $15Bn in Crypto in the largest bust so far. The company implicated, called Prince Group, apparently owns many different assets including a company that was working on a luxury resort on Palau. The crackdown on Prince Group is thorough. The UK is in, as are the governments of Palau, and to some degree Cambodia. As always in Southeast Asia, money is flowing through Singapore and Hong Kong, so those governments are likely to get in on the action too. This looks like a big bust in progress, which will make it harder and more expensive for these operations to keep running.

I watched The Beekeeper, a Jason Statham movie where he goes on a rampage after his elderly landlady gets scammed. This is a classic Jason Statham vigilante flick, with lots of over the top and unnecessary violence, albeit for the good of society. I love Statham movies. Will not pass up the probably unique opportunity to recommend a ‘bad’ Statham movie on my Substack.

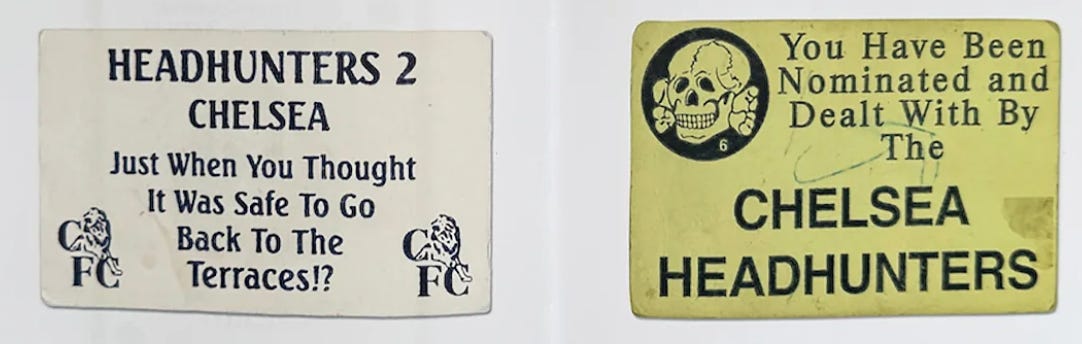

Hooligan business cards

Apparently, UK Hooligan ‘firms’ used to carry business cards. As you might expect, they’re quite silly.

Somebody has collected them and created a book.

Cultural change in Saudi Arabia

When the Saudi Comedy festival happened I came across a headline that Dave Chappelle had said it was ‘easier to talk’ in Saudi Arabia than it is in the US. I scrolled past it. The Saudis have a lot of money and these comedians need to eat, right? But getting past that cynical instinct, it is somewhat notable that Chappelle, but also Jimmy Carr and Louis C.K., performed stand-up comedy in Riyadh.

So I was happy to click on this article by an Atlantic journalist who went to the festival, and this shorter accompanying taster. It turns out that in its bid to diversify away from oil, Saudi Arabia is actually changing its culture. It’s a lot more free than it used to be, something I had vaguely registered but that hadn’t really landed. Of course, it’s still authoritarian. I found that this section of the taster piece does a good job summing up the vibe.

Saudi Arabia used to be notorious for the restrictions it placed on women: they couldn’t drive or walk around with their heads uncovered, and needed a husband or male relative to give them permission to work or travel. Today, it’s far from a feminist paradise, but far more women go to work—most of the border staff I saw at the airport were efficient women in niqabs, and we travelled in a Family carriage of the Riyadh subway alongside dozens of professional women, watching videos on their phones. (Another surprise: I didn’t run into any restrictions on the English-language internet, and was able to read The Guardian, The Atlantic and Daily Mail on my burner phone just fine. When Jimmy Carr did a joke about OnlyFans, the audience in Riyadh laughed.)

At the same time, one of the most prominent campaigners for women drivers, Loujain al-Hathloul, was locked up around the time that women got that right: a very clear message to would-be activists that one man has been put in charge of Saudi’s Struggling Feminist Movement, thank you very much. In 2024, a women’s rights campaigner who took literally MBS’s relaxation of modesty laws was convicted of “terrorism” offences.

The Atlantic article is certainly worth a read!

AI Bro language: being -pilled and cracked

I’m sure you’re aware of the use of “-pilled” to basically mean “something that aligns with doctrine” or “something you’re into”. It started with the Matrix, where the blue pill takes you back to sleep and the red pill allows you to escape. That was adopted by fringe online sub-cultures like the anti-feminist Red Pill subreddit. The misogyny in that former reddit community was also what gave the term mainstream exposure. Originally there were just colors:

Red-pilled - Political/social awakening, originally enlightenment but now often associated with far-right views

Blue-pilled - Remaining ignorant/accepting mainstream narratives

Black-pilled - Nihilistic, defeatist outlook (associated with incels and doomers)

Apparently, a trend started in the early 2020s to use “pilled” in the way it’s currently used.

A 2021 Atlantic article documented people claiming to be “pilled” on random things like Rihanna’s makeup line, coziness as a concept, cats sitting in gardens, Kansas, red sauce, and Hank Williams Substack. The article noted that “-pilled” had become “an appropriate substitute for simply ‘suddenly becoming really into something.’” Source

In the Andrej Karpathy interview, he speaks for a bit about things in AI Research being “Bitter-lesson-pilled” [1]. Substack is full of people being -pilled or claiming that they, or someone else, are -pilled in some way. The people who use this word are, more than anything else, online too much.

Now, this could fall in the category of “I’m turning 40 and get annoyed by slang I didn’t grow up with”. Or maybe it actually is annoyingly hyper-American and very Twitter. Personally, I cannot recall ever hearing anyone use this in real life, but that will undoubtedly be different in San Francisco. But in any case, I find it kind of dumb sounding.

This got me thinking about current online slang and AI jargon more generally. Being -pilled is not at all limited to tech, but the people who are saying things like bitter-lesson-pilled speak in new riddles. This is basically the ‘Corporate Bullshit’ of AI talk. Some examples for your AI Bro Bingo card [2]:

Priors: What are your priors on that? My priors are… We have good priors. It means starting assumptions. Prior expectations. Comes from Bayesian statistics, by way of data science. Lex Fridman uses this ALL THE TIME.

Test-time: AI models are trained first, and then they are used. Anything that happens when they are actually being used is said to happen “at test-time”. Memory tools become ‘learning at test time’. Karpathy said animals “have to figure it out at test time when they get born.” This one comes from Machine Learning.

Agentic: This is not in the dictionary. But it’s fine. Everyone kind of knows what it means, but not quite. Nobody can define what an agent is. Tool calls in a loop? Something that adds value autonomously? By that logic a Roomba is an agent. It probably is? Why not?

Vibe- : Karpathy actually COINED the term vibe-coding. I see vibe-marketing, vibe-working, etcetera. I liked it from day one.

Cracked: “That model is cracked” or “she’s cracked at prompting.” Means exceptionally good/powerful. Borrowed from gaming culture. Applied to both AI models and the people who work with them. Frowned upon to call yourself ‘cracked’.

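Grokking: Instead of saying you understand something, AI-adjacent people now “grok” it. This one’s older (it comes from Robert Heinlein’s 1961 novel Stranger in a Strange Land), but it has gone mainstream in AI circles. The story goes that a researcher at OpenAI accidentally let a small model train for way longer than planned, and, to everyone’s surprise, it suddenly went from memorizing its training data to actually ‘understanding’ the task. The resulting ‘grokking’ paper was about tiny models on toy math problems, though, so it is not what enabled ChatGPT.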

Bonus round of internet slang, this one is not really Tech/AI oriented. But it IS important and new.

Based: “That’s based” or “based take.” A term of approval for something that is controversial in a certain evil/vice-signalling way. Originally from rapper Lil B [3], then 4chan, now everywhere in tech Twitter and among the MAGA right. If you haven’t read Richard Hanania’s Based Ritual post, it’s a must-read.

[1] The “Bitter Lesson” is a famous idea from Richard Sutton, who also has a Dwarkesh episode, that all progress in AI stems from throwing more data and more computing power at the problem. The bitter lesson itself being that time and time again, people working on AI have discovered that simple scale trumps all methodology and systems design.

[2] Don’t worry, I realize I am an AI bro and tech bro, and even a finance bro. Guilty as charged. Although I do try to limit jargon and I have a history of poking fun at bullshitty language.

[3] This, I find, is one of those fantastic absurdities of the world: A Bernie Sanders-voting, woke, black rapper literally calling himself the BasedGod coins a term which is now used by young Hitler-loving Republicans to high-five each other for wanting to repeal female voting rights. 🤦
