Running multiple AI Agents in parallel reminds me of working in Myanmar

How I caught up with Agentic Coding practices and now run multiple Agents in parallel. How we have reached peak-scaffolding for AI. This is one of the more technical ones.

My feeling that my content inspiration was drying up might have been a false alarm driven by holiday boozing and not working. I also felt awfully behind on AI, given that I understood less than half the stuff that was coming across my screen. Ralph Loops. Beads. Gas Town. People running 20-30 agents in parallel. It felt like I had somehow gotten off the train, with no hope of catching up again.

But then I saw a post from Boris Cherny (one of the creators of Claude Code) on how he uses Claude Code. Boris seems to be a legit engineer who ships features to Claude Code, a product I use daily, that is highly innovative, and has very few bugs. His opening line: “My setup might be surprisingly vanilla! Claude Code works great out of the box, so I personally don't customize it much.”

Me like simple, hate complex. Also, I figured I might have a chance to understand simple. So I committed to trying out, and understanding(!), everything Boris posted. That also meant pulling the trigger and going all-in on Claude. I cancelled ChatGPT and Cursor, and got the $150 per month 🤯 Claude Max plan. Like an expensive gym membership, paying incentivizes use. An immediate positive effect: I hadn’t realized how much ‘rate limit anxiety’ I had, which was keeping me from using AI as much as I wanted. With Max, that’s gone.

I’m now running 1-3 Claudes (and Geminis) at the same time. I’m back in the swing of it. Boris claims he runs 10-15 in parallel. About all this, I have thoughts. Hence, content for Substack.

Working with multiple agents is… intense

When I was working in Myanmar, we had 6Mbps of patchy internet for 30 people. That is slow. During a work day, the internet would drop out regularly, and websites sometimes loaded like they would in the 90s, slowly from top to bottom. I got pretty good at the Google Chrome T-Rex running game during those days. But I also acquired a habit of multi-tasking like a maniac.

Open a Google Doc. Send emails while it’s loading. Open another Google Doc. Go back to Doc 1 and perform some actions, set it off to load. Go to Doc 2 and do some stuff. While it loads, go to Skype and send messages. Talk to an employee. Back to Doc 1. Open Doc 3, etcetera. If that seems exhausting, it is.

Working with multiple AI agents feels exactly the same. These things are not like employees where you give them a couple of long-term goals and then have a weekly one-on-one. These things run for 2 minutes to an hour and then stop to wait for instructions. They also often ask for permission to do things while they work (workarounds exist). That means you need to keep the state of every agent in your head, and the constant context-switching to continue prompting whichever one needs attention is brutal.

On the other hand, I can almost sort-of work with only prompting now. Gemini is connected to all my work stuff, checking my email and Drive for updates. It can read and edit docs. I used it to collate and compare information on a project across 4 different Google Docs, give me a rundown of inconsistencies and write (great) feedback to the team on goal setting. It was also able to write goals to a Google Sheet, including metrics. I’m still connecting it to more stuff.

Claude is now making my meal plan, creating a shopping list, and putting groceries into my shopping cart. It also helped me choose a Curcumin supplement and checked it out online using my saved details. At the same time, Claude Code is constantly working on Magicdoor in the background. It’s working on creating an autonomous marketing agent running on Claude Code, which (using a browser plugin and APIs) should go and post on Reddit, Twitter, LinkedIn, etcetera. If I post on Substack, an agent automatically creates the linked twin of that post on my personal website. It’s all pretty awesome, with one caveat: all of this is seriously increasing my output, but to what degree that output is ‘slop’ is an open question. At least so far, a lot of it is pretty good and I actually use it.

But I also dream about prompts and terminal screens, and I’m not making any more money or spending more time on the beach just yet.

The discourse online made it seem way more complicated than it is

Here is what I learned when I looked under the hood of multi-agent stuff. Somewhat unsurprisingly, all those multi-agent/agent-swarm/whatever things are still LLMs, and hence the whole thing revolves around three key things: Context, Tools, and Tests. The modern way to manage context (what the AI knows) is to use written plans stored in files that the AI writes and edits, independent of any chat session. I think this is probably a big leap for people who have really never done any coding with AI. Let me explain a bit more.

Context: Claude Code runs inside a specific folder on your own computer. It has access to this folder, where it can search, read, create, and edit all kinds of files. So inside that folder, you let Claude create a file called CLAUDE.md (md stands for markdown, which is ultimately just a text file). That file contains the instructions for Claude, with details about the project. Now that you have stored the prompt separately from the chat itself, you can very easily run multiple chats on the same project in parallel. Even better, you can have multiple files with project context and progress reports. These files are how you work together with AI, similar to how you might have a shared doc with an employee to keep track of projects.

Tools are how AI interacts with the world. Reading files, creating files, searching the web, running code, pushing to GitHub, reading email. A tool is just a set of instructions on how to make a certain API call and what to do with the results. Connectors, MCP servers, and Skills are nothing but various ways of standardizing and protocolizing tool calls. Without tools, there is no agent, there is just chat.
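To make "a tool is just a set of instructions" a bit more concrete, here is a minimal sketch in shell. It assumes the model answers with a tool name plus one argument, and that some wrapper script runs the matching command and hands the output back. The function run_tool and the tool names read_file and list_files are made up for illustration; this is not how Claude Code, MCP, or any connector is actually implemented.

```shell
# run_tool NAME ARG: run one named tool and print its result.
# NAME and ARG would come from the model's reply; both tool names
# below are hypothetical examples.
run_tool() {
  case "$1" in
    read_file)  cat -- "$2" ;;      # return the contents of a file
    list_files) ls -1 -- "$2" ;;    # return one file name per line
    *)
      # Anything not on the list is refused instead of executed.
      echo "unknown tool: $1" >&2
      return 1
      ;;
  esac
}
```

Calling run_tool read_file notes.txt prints the file, and that text is what would go back into the chat as the tool result. The catch-all branch is the interesting design choice: the agent can only do what is on the list.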

Tests: The only way for an LLM to know if it has successfully completed a task is if it has a way to check the result. The key reason LLMs are so useful for coding is that code is testable. At its most basic, it either runs (compiles) or it doesn’t. If it runs, it can be tested programmatically. There are even ways for the LLM to use a browser to try out an app and verify that it looks as expected.
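The two stages above ("does it run at all?" and "does it do the right thing?") can be sketched as a small shell function. This is only an illustration under my own assumptions: verify is a made-up name, the thing being checked is a shell script, and the test command is whatever you pass in as a string.

```shell
# verify SCRIPT TEST_CMD: two-stage check of a shell script.
# Prints "ok", "syntax error", or "tests failed".
verify() {
  script=$1
  test_cmd=$2

  # Stage 1: does it even parse? `sh -n` reads the script without running it.
  if ! sh -n "$script" 2>/dev/null; then
    echo "syntax error"
    return 1
  fi

  # Stage 2: does it behave? Exit status 0 from the test command means pass.
  if ! sh -c "$test_cmd" >/dev/null 2>&1; then
    echo "tests failed"
    return 2
  fi

  echo "ok"
}
```

The point is that the answer is machine-readable: an agent does not have to guess whether it succeeded, it can read the verdict.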

The complicated things with exotic names that people now talk about are nothing but creative and sometimes extreme ways of building scaffolding for LLMs, with the goal of making them run for longer, with less human intervention, on harder tasks.

The recent vibe coding discourse has been about two people(?): Ralph Wiggum, and Steve Yegge.

The Ralph Loop is just Claude Code in a loop

(deep breath in)…If you take the “Test” concept above, and then if an AI Agent’s work fails a test, you allow the AI to write a note to a file (Context) about how to avoid failing a test in the future, and then automatically start it off again for another run, and then repeat this as long as necessary until a list of tasks is completed, where ‘completed’ is defined by it passing the tests, (deep breath out) you have what for some reason is now called a Ralph Loop.

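while :; do cat PROMPT.md | claude-code ; done

That just means: run Claude Code with PROMPT.md as the input, over and over. The loop itself has no idea when the work is ‘done’ (while : repeats forever), so you stop it yourself once the tasks are complete.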
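The fuller version described above (run, test, write a note on failure, go again) can be sketched like this. It is my own minimal reading of the idea, not anyone's official implementation. The function name ralph_loop, the files NOTES.md and test.log, and the run limit are all assumptions, and the agent and test commands are passed in as plain strings. One simplification: here the script writes the failure note itself by copying the end of the test output, instead of letting the agent write it.

```shell
# ralph_loop AGENT_CMD TEST_CMD [MAX_RUNS]
# Re-run the agent until TEST_CMD passes or MAX_RUNS is reached.
# NOTES.md and test.log are hypothetical file names for this sketch.
ralph_loop() {
  agent_cmd=$1
  test_cmd=$2
  max_runs=${3:-10}
  run=1

  while [ "$run" -le "$max_runs" ]; do
    # Context: the fixed prompt plus any notes from earlier failed runs.
    cat PROMPT.md NOTES.md 2>/dev/null | sh -c "$agent_cmd"

    # Tests: exit status 0 is the definition of 'completed'.
    if sh -c "$test_cmd" >test.log 2>&1; then
      echo "passed after $run run(s)"
      return 0
    fi

    # Failed: leave a note in a file so the next run starts better informed.
    {
      echo "## Run $run failed"
      tail -n 20 test.log
    } >>NOTES.md

    run=$((run + 1))
  done

  echo "gave up after $max_runs run(s)"
  return 1
}
```

Something like ralph_loop 'claude-code' 'npm test' 20 would be the usage, with both commands swapped for whatever you actually run. The run limit is the one thing I would not leave out: unlike the one-liner, this version stops on its own.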

In practice, Ralph Loop implementations are credited with two major successes so far. Some folks at a Y Combinator hackathon shipped a lot of almost-working code while they were sleeping, and a software agency delivered a US$50k project at a cost of $297.

Beads and Gas Town appear to be a mess

But what if you could run an entire village of Ralphs at the same time? Now we are ready to look at the work of a programmer called Steve Yegge. This quote from him sums up his mentality:

The agents must never rest.

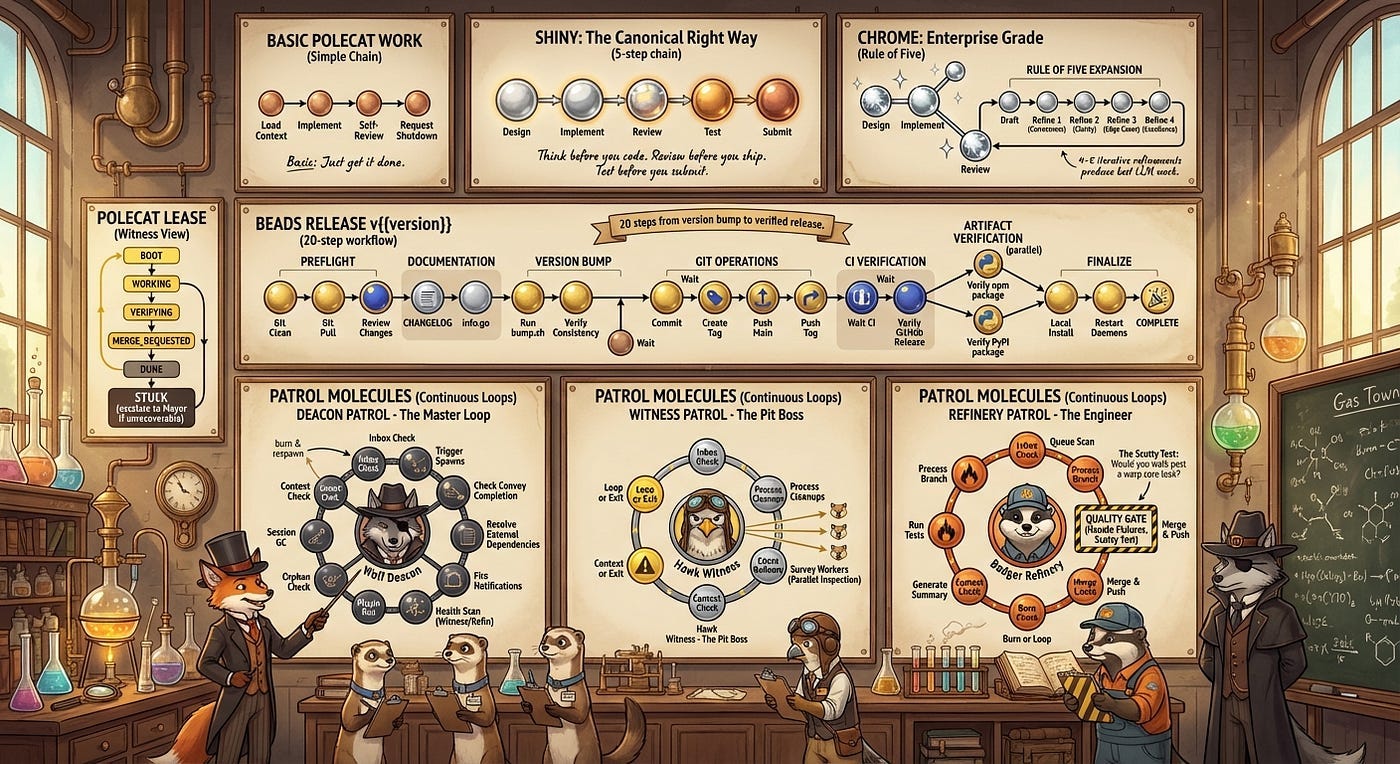

Steve came up with Beads, a memory system for coding agents. It is designed to keep agents on track, and ensure they don’t forget any steps. The problems he identified are definitely real. But conceptually this is just advanced context engineering (all AI memory is just context engineering). He followed it up with something called Gas Town, an even more complicated system meant to run, in his words, “20-30 agents at the same time, productively”. With an ultra-complex system of ‘mayors’ and ‘polecats’ (don’t even worry about it), and three different loops on different levels, some kind of agentic coding monstrosity unfolds.

Unfortunately, it has some issues. It is monstrously difficult to understand, it doesn’t really work, and Beads (which is integral to Gas Town) apparently has virus-like behavior. After some massive hype, it seems most people now hate it.

Steve himself also writes: “Work in Gas Town can be chaotic and sloppy, which is how it got its name. Some bugs get fixed 2 or 3 times. Other fixes get lost. Designs go missing. It doesn’t matter, because you are churning forward relentlessly.” And he has had to apologize: “Apologies to everyone using Gas Town who is being bitten by the murderous rampaging Deacon who is spree-killing all the other workers while on his patrol.” One Medium piece described Gas Town as watching “a man who has seen the face of God and it drove him insane.” A similar reaction:

Twitter/X - @realshcallaway: “This Gas Town project is insane. Steve Yegge has clearly been thinking about agentic coding workflows obsessively for months now and might be losing his mind in the process.”

Apparently, Steve vibe-coded the whole thing. This is a good, balanced summary of the online drama. I just want to say that I agree the whole thing sounds like Steve has completely lost his marbles, but I also find his writing funny and refreshingly honest, for example:

We covered a lot of theory, and it was especially difficult theory because it’s a bunch of bullshit I pulled out of my arse over the past 3 weeks, and I named it after badgers and stuff. But it has a kind of elegant consistency and coherence to it. Workflow orchestration based on little yellow sticky notes in a Git data plane, acting as graph nodes in a sea of connected work.

Yuck! Nobody cares, I know. You want to get shit done, superhumanly fast, gated only by your token-slurping velocity. Let’s talk about how.

Also, it has to be said: What kind of maniac manages to ship over 250,000 lines of working Golang code in a few weeks? Even if it’s crappy, it is a mind-boggling feat of productivity. And in that way, it is almost the perfect meta-commentary on vibe coding and Generative AI itself. Also, and I promise this is the last thing I have to say about Beads/Gas Town, somehow there is a token about this on Solana?[1]

In any case, some people describe Gas Town as “Kubernetes for AI”. Over the years I have read the Wikipedia page of Kubernetes multiple times and I still don’t understand it. So, I have to admit there is a pretty good chance that when it comes to Gas Town, I simply don’t get it. But, if I do correctly understand it, I think Gas Town represents peak scaffolding. It will get simpler from here. Claude Code already has its own task lists and plans. Boris’s solution to constant permission prompts is simply to pre-allow a list of safe actions. No mayors, no polecats, no three-level loops. Indeed, Anthropic is widely rumored to already be working on built-in orchestration tools that would make this whole thing obsolete.

Don’t worry too much about being behind

A serious update every 3-6 months is OK. Commit to learning everything that’s going on every now and then instead of trying to constantly stay on top. Constantly monitoring AI news is absolutely exhausting, and a lot of stuff ends up being ephemeral (e.g. Beads was short-lived, Claude Code subsumes a lot, model quality makes scaffolding obsolete). A week ago I had no idea anymore what was going on in agentic coding and now I’m running multiple agents in parallel. Considering my eyes glazed over when I tried to read the Gas Town post, I can assure you, this is not because I’m a genius. It’s just that catching up doesn't require months. It’s still just prompts and tool calls. Having said that, things have genuinely shifted. The models are better, Claude Code has matured, and the scaffolding concepts have settled. If you've been putting off a catch-up, now is a good time.

[1] Gas Town (GAS) is a Solana-based token linked to a tool created by Steve Yegge for managing multiple AI coding agents, such as Claude Code instances, acting like a factory floor supervisor for them. The token’s associated liquidity pool directs 99% of fees to Steve Yegge and 1% to the AMM. It’s trading on DEXes like Meteora, with a price around $0.001165 USD, some liquidity, and decent volume. It looks like a memecoin-style setup, but with a utility angle linked to Yegge’s project.