What I think AI will mean for society

Yup, I wrote my own essay to follow Sam Altman and Dario Amodei. It is not as long, and a bit more nuanced.

Developments in AI have been a recurring theme in my newsletter. I’m quite deeply involved in generative AI to be honest, which feels weird to write. I am an avid follower of the space, reading more than I probably should, trying every tool, and trying to push the boundaries by jailbreaking Meta AI in WhatsApp.

More seriously, I’m deploying Generative AI at scale in my job as CX Director at Carousell, and using it to learn to code and create a side project. Just last week, a founder friend called me to ask how he could do some things with Gen AI, and I explained to him exactly how custom GPTs work and how that applies to his use case. By now, I’m helping out 3-4 AI startups with intros to VCs and jamming sessions on their products and plans. It feels like for the first time in my life I’m actually an early adopter of a transformative new technology. It’s exciting!

The discourse online is polarized, with boosters talking up how AI is going to change everything and skeptics claiming it’s not going to change anything. The truth is more nuanced, and not as complicated as people make it out to be. There is still a lot we don’t know, so it’s similar to talking about the internet in 1995, but there is also more and more we do know. So for a change, I am going to write about nothing but AI, and in particular about its societal impact. You can see this as a round-up, but with a lot more synthesis work done by me to explain what it all means. There is a short glossary of AI terms in the footnote at the end of this post.

There is no question that GPTs, even at their current level of performance, are a transformative new technology

GPTs are a broad spectrum productivity booster, akin to the steam engine, electricity, the PC and the Internet. In my team, we have been able to handle a +40% increase in support volume, while improving just about every KPI, and spending materially less than budgeted by slowing our hiring pace. In information handling fields as diverse as marketing, programming, legal work, accounting, and science, productivity gains of 20-60% are already being unlocked.

All big companies have a lot of ‘Secret Cyborgs’.

Ethan Mollick wrote a series of papers about AI usage, and he coined the term Secret Cyborg for employees who are secretly using ChatGPT to be more productive.

From the surveys, and many conversations, I know that people are experimenting with AI and finding it very useful. But they aren’t sharing their results with their employers. Instead, almost every organization is completely infiltrated with Secret Cyborgs, people using AI work but not telling you about it.

Why does this happen? Pretty simple and glaringly obvious once you read the below.

Here are a bunch of common reasons people don’t share their AI experiments inside organizations:

They received a scary talk about how improper AI use might be punished. Maybe the talk was vague on what improper use was. Maybe they don’t even want to ask. They don’t want to be punished, so they hide their use.

They are being treated as heroes at work for their sensitive emails and rapid coding ability. They suspect if they tell anyone it is AI, people will respect them less, so they hide their use.

They know that companies see productivity gains as an opportunity for cost cutting. They suspect that they or their colleagues will be fired if the company realizes that AI does some of their job, so they hide their use.

They suspect that if they reveal their AI use, even if they aren’t punished, they won’t be rewarded. They aren’t going to give away what they know for free, so they hide their use.

They know that even if companies don’t cut costs and reward their use, any productivity gains will just become an expectation that more work will get done, so they hide their use.

They are incentivized to show people their approaches, but they have no way of sharing how they use AI, so they hide their use.

We can expect a growing divide between senior management and employees in their understanding of how long it takes to do things. That has parallels in the PC era too. In 2014 I had a Managing Director who typed with two fingers. He also had no idea how much effort any financial model took to build. This sometimes worked to my advantage, and sometimes…not so much.

So the conclusion here, in case there was any doubt: Generative AI really is a transformative technological shift. There is no need to buy into the hyperbolic AGI stuff to try on this point of view. If you are planning to keep working (or living) for a while, it makes sense to adopt AI asap, in the same way it made sense to adopt PCs in the late 80s and the internet in the mid 90s.

If you do adopt it, you’ll quickly start getting a feel for the limitations

Impressive results and real gains notwithstanding, there is also a valid narrative of slow adoption and failing implementations. In the enterprise, it seems like not much is happening so far beyond Proofs of Concept (PoCs). McDonald’s cancelled its trial of an automated drive-through system because it made too many mistakes, like this order of one Caramel Sundae without Caramel, four ketchup packets and three butter, or one order where the bot mistakenly added $220 of chicken nuggets.

There is good reason for skepticism, and all of that talk about AGI is a distracting overpromise (at least, that’s what I think). Automating things with software is HARD, as Steven Sinofsky (of Microsoft Office and Windows 7 fame) wrote recently.

There’s a deep reality about any process—human or automated—that few seem to acknowledge. Most all automations are not defined by the standard case or the typical inputs but by exceptions. It is exceptions that drive all the complexity. Exceptions make for all the insanely difficult to understand rules. It is exceptions that make automations difficult, not the routine.

Unfortunately, AI so far struggles very much with exceptions. That’s what killed the McDonald’s trial, and it goes beyond Generative AI to things like self-driving cars. A hilarious recent example: neighbours complained that every morning at 4am, self-driving Waymo cars would create a traffic jam in their parking lot and start honking at each other. The argument from self-driving maximalists that all problems with autonomous cars would be solved if only we made ALL cars autonomous was loudly falsified by dumb-ass Waymo cars in parking lots across California.

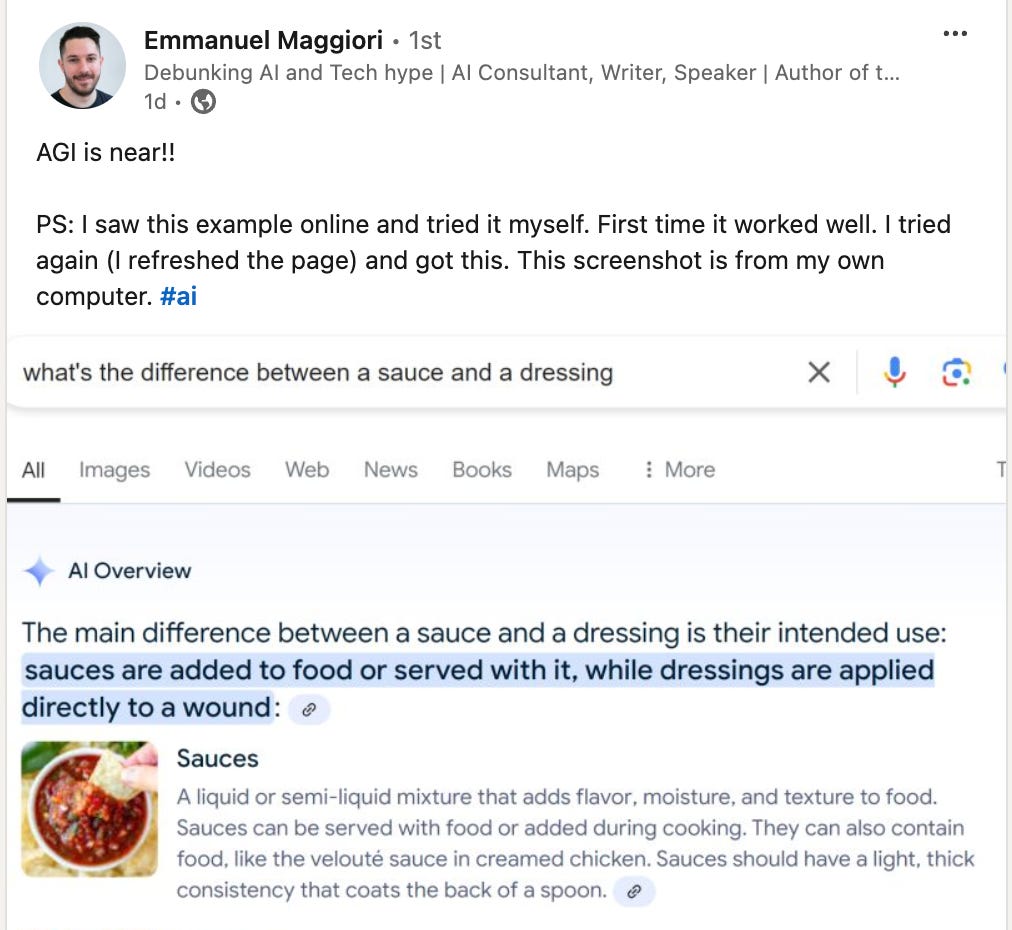

These systems get the right answer about 90% of the time, which is cool, but it’s not enough to do pretty much anything without human supervision. It’s a fact that Generative AI models are probabilistic. They don’t know what they are doing, they just calculate what the likeliest next word should be. From a technological perspective, it looks extremely unlikely that Generative Pre-trained Transformer models are enough to get anywhere near AGI. Likely, completely novel, uninvented technological breakthroughs are needed to complement the GPTs. My favorite person to explain the technical nuances of this by far is Emmanuel Maggiori, who wrote a couple of excellent books like “Smart until it’s dumb”.

A lot of his content on LinkedIn consists of these kinds of funny fails, of which there are plenty. But his books are a lot more nuanced. It is he who articulately, and from a position of deep expertise in Machine Learning, makes the case that self-driving cars are unlikely to be safe any time soon, for fundamental reasons about the way machine learning works. Paraphrasing an illustrative example I’m pretty sure I read in his book: As a human driver, you use information from your non-driving life to make better decisions while driving a car. If an obstacle emerged in front of you, you’d act differently if it were an umbrella than if it were a horse. This is based on your general experience with horses and umbrellas. You know the relative implications of slamming into one versus the other. But a self-driving system would have to be trained on every single conceivable case.

We experience the pitfalls of Tesla Vision several times during an hour-long drive. Once, the car tries to steer us into plastic bollard posts it apparently can’t see. At another moment, driving through a residential neighborhood, it nearly rams a recycling bin out for collection — I note that no approximate shape even appears on the screen.

If you haven’t read my own reflections on coding with AI (as a newbie developer), I detailed the limitations here, as well as the positives. The reality is quite simple. Transformative productivity gains, but not full automation. We can formulate the following two litmus tests for AI content on the internet:

Those claiming that AI is completely useless, probably haven’t really tried using it.

Those claiming it can replace or automate entire departments, probably haven’t really tried using it.
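Why is "right about 90% of the time" not enough for full automation? Here is a back-of-the-envelope sketch. Real workflows chain several steps together, and errors compound. The function name and the assumption that every step fails independently at the same rate are mine, purely for illustration; real systems will not be this tidy.

```python
def chain_success_rate(per_step_accuracy: float, steps: int) -> float:
    """Chance that every step in a chain is right.

    Assumes (for illustration only) that steps succeed or fail
    independently, each with the same accuracy.
    """
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


if __name__ == "__main__":
    # How often does a multi-step task come out fully correct
    # when each individual step is right 90% of the time?
    for steps in (1, 5, 10, 20):
        rate = chain_success_rate(0.9, steps)
        print(f"{steps:>2} steps at 90% each: {rate:.0%} fully correct")
```

Under those assumptions, a ten-step task comes out fully right only about 35% of the time. That is the gap between a great productivity booster and something you can leave unsupervised.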

It’s highly unlikely that AI is going to create a crisis of mass unemployment

If we continue to consider AI a broad revolution similar in nature to the steam engine, electricity or the PC, we could look at what happened in those revolutions. The argument that triggers people, like the striking dockworkers in the US, is that automation will reduce the number of things for humans to do. Therefore, it should reduce the number of available jobs, and to add insult to injury, due to supply/demand it would then also reduce pay for those few remaining jobs. In fact, people were very afraid this would happen during the industrial revolution in the 19th century.

But that didn’t actually happen at all. Steam engines did obliterate many jobs in factories, as cars later did to almost anyone working with horses, not to mention the horses themselves. But these technologies helped create a huge number of new jobs. Far, far more, in fact, than they replaced. The same pattern plays out with every transformative technology. Here is a list of things that technology can do:

AI could replace human jobs. This is the one everyone tends to focus on. Acemoglu singles out “simple writing, translation and classification tasks as well as somewhat more complex tasks related to customer service and information provision” as candidates for job elimination.

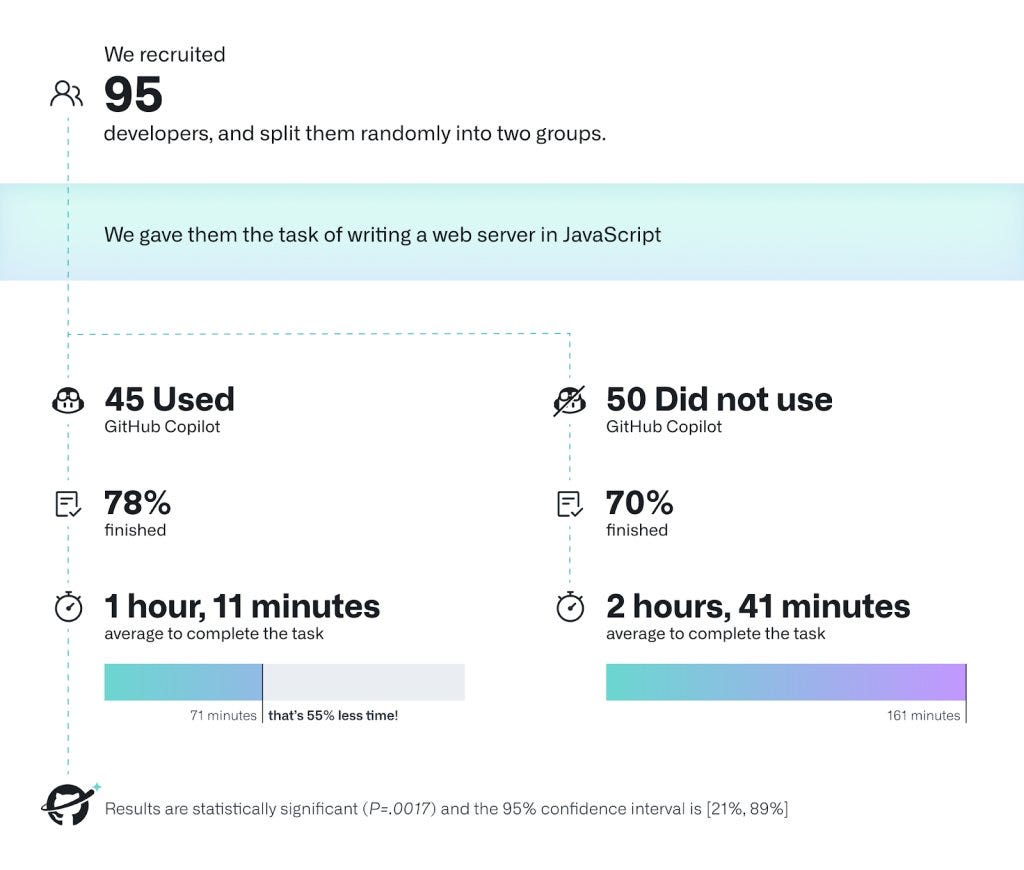

AI could make humans more productive at their current jobs. For example, GitHub Copilot helps people code. This might either create or destroy jobs, depending on demand.

AI could improve existing automation. Acemoglu suggests examples like “IT security, automated control of inventories, and better automated quality control.” This would raise productivity without taking away jobs (since those tasks are already automated).

AI can create new tasks for humans to do. In a policy memo with David Autor and Simon Johnson, Acemoglu speculates on what some of these might be. They suggest that with the aid of AI, teachers could teach more subjects, and doctors could treat more health problems. They also suggest that “modern craft workers” could use AI to make a bunch of cool products, do a bunch of maintenance tasks, and so on. (As I’ll discuss later, it’s actually very hard to imagine what new tasks a technology might create, which is one big problem with discussions about new technologies.)

Noah Smith, paraphrasing this list from an Acemoglu paper

The last note is interesting. If we knew everything a technology could do, we would be doing the good things already while avoiding the bad things. It’s not that simple. We need time + capital + talent to build, launch, and iterate our way to figure this out. In any case, a lot of people, including Acemoglu, focus very much on points 1 and 2 from the list, while assuming without any good reason that 3 and 4 simply won’t happen. It’s almost as if the Nobel Prize-winning author on inequality had a conclusion in mind before starting the paper….

Now, there could be some reason why AI is different than the technological leaps that came before, but what would that be? It looks most likely that a mix of things will happen, spanning all four categories from the list above.

You might be thinking: “you don’t get it Gijs, Super AI will be better than humans at everything. We’re all going to be automated away!” Well, even if AI and robots do become better than humans at every possible task, I think we will still have jobs. The reason is an economics concept called Comparative Advantage. Noahpinion explains it here, this is the key example:

Imagine a venture capitalist (let’s call him “Marc”) who is an almost inhumanly fast typist. He’ll still hire a secretary to draft letters for him, though, because even if that secretary is a slower typist than him, Marc can generate more value using his time to do something other than drafting letters. So he ends up paying someone else to do something that he’s actually better at.

The constraint here is that there is only one Marc, so even though he is better than his typist at everything, he still has to hire a typist, otherwise he gets stuck at some point. Does AI have any such constraints? Energy is one. It doesn’t matter how much electricity we generate, and how many GPUs we produce, it will always be a finite amount. Comparative Advantage is a subtle concept, so let me quote one more explanation, this one from Dario Amodei, the CEO of Anthropic:

First of all, in the short term I agree with arguments that comparative advantage will continue to keep humans relevant and in fact increase their productivity, and may even in some ways level the playing field between humans. As long as AI is only better at 90% of a given job, the other 10% will cause humans to become highly leveraged, increasing compensation and in fact creating a bunch of new human jobs complementing and amplifying what AI is good at, such that the “10%” expands to continue to employ almost everyone. In fact, even if AI can do 100% of things better than humans, but it remains inefficient or expensive at some tasks, or if the resource inputs to humans and AI’s are meaningfully different, then the logic of comparative advantage continues to apply. One area humans are likely to maintain a relative (or even absolute) advantage for a significant time is the physical world.
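If comparative advantage still feels slippery, here is a minimal sketch of the Marc example in Python. All the numbers are made up by me for illustration (hourly deal value, typing speeds, an eight-hour day, twenty letters to send); none of them come from Noah’s or Dario’s essays. What it tries to show: the question is not who types faster, but whose typing time is cheaper in terms of what they give up.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    name: str
    value_per_hour_elsewhere: float  # dollars created per hour when NOT typing (assumed)
    letters_per_hour: float          # typing speed (assumed)

    def opportunity_cost_per_letter(self) -> float:
        """Dollars of other work given up for each letter typed."""
        return self.value_per_hour_elsewhere / self.letters_per_hour


# Hypothetical numbers: Marc is the faster typist AND the better dealmaker.
marc = Worker("Marc", value_per_hour_elsewhere=1000.0, letters_per_hour=10.0)
secretary = Worker("Secretary", value_per_hour_elsewhere=20.0, letters_per_hour=5.0)


def cheapest_typist(workers: list[Worker]) -> Worker:
    """The person with the lowest opportunity cost per letter should type."""
    return min(workers, key=lambda w: w.opportunity_cost_per_letter())


def marc_deal_value(hours_in_day: float, letters_needed: float, marc_types: bool) -> float:
    """Deal value Marc creates in one day, depending on who types the letters."""
    typing_hours = letters_needed / marc.letters_per_hour if marc_types else 0.0
    return (hours_in_day - typing_hours) * marc.value_per_hour_elsewhere


if __name__ == "__main__":
    for w in (marc, secretary):
        print(f"{w.name}: ${w.opportunity_cost_per_letter():.0f} given up per letter")
    print("Should type:", cheapest_typist([marc, secretary]).name)
    print("Marc types himself:", marc_deal_value(8, 20, marc_types=True))
    print("Marc delegates:    ", marc_deal_value(8, 20, marc_types=False))
```

With these made-up numbers, Marc gives up $100 for every letter he types and the secretary gives up $4, so the secretary types even though she is slower. Delegating is worth $2,000 a day to Marc before wages. Swap Marc for an AI with a finite energy or compute budget and the secretary for a human, and the logic is the same.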

Apart from energy, compute, and physical constraints (shitty robots), the other major constraint on AI is probably data. Have you ever had to download a CSV file from somewhere, and then reupload it somewhere else? Or struggled to do something because the data was not being captured, or was just low quality? AI won’t magically make these constraints go away.

Having said all that, I do believe the long-term picture looks muddier, especially if we do bring AGI back into the picture. So far we still assume that humans control AI. What if AI indeed rebels and tries to take control over its key inputs: energy and compute? It could cut humans off from energy to get more for itself, or turn humans into batteries, Matrix style. This kind of stuff is not that far-fetched, and that’s why I do believe alignment (safety) is important.

What could happen in some great scenarios

Sam Altman (OpenAI) and Dario Amodei (Anthropic) both wrote hyper positive essays about the future. Sam Altman wrote a fairly short note titled ‘The Intelligence Age’, which consists almost entirely of hyperbole, and doesn’t contain much real content.

AI models will soon serve as autonomous personal assistants who carry out specific tasks on our behalf like coordinating medical care on your behalf. At some point further down the road, AI systems are going to get so good that they help us make better next-generation systems and make scientific progress across the board.

This feels so devoid of substance to me that it almost makes me reconsider the rumours that Sam Altman himself is an LLM.

Dario Amodei followed up with his own essay. Unfortunately, not all of it is as tight and succinct as his explanation of comparative advantage that I quoted above. This essay clocks in at a whopping 15,000 words. But thankfully, it does contain at least a couple of pretty interesting nuggets. At first, Dario goes into some constraints, including the one I mentioned on data (energy and compute being notably absent). He also very clearly explains the human constraint:

Constraints from humans. Many things cannot be done without breaking laws, harming humans, or messing up society. An aligned AI would not want to do these things (and if we have an unaligned AI, we’re back to talking about risks). Many human societal structures are inefficient or even actively harmful, but are hard to change while respecting constraints like legal requirements on clinical trials, people’s willingness to change their habits, or the behavior of governments. Examples of advances that work well in a technical sense, but whose impact has been substantially reduced by regulations or misplaced fears, include nuclear power, supersonic flight, and even elevators.

But enough about that, let’s get into some cool ideas.

In Biology and Medicine (actually the field of my Bachelor, and Dario’s too!) he makes a set of observations that struck me. On the one hand, serial dependence (you have to invent fire before you can invent spare ribs), human constraints (laws, regulations) and practical constraints (experiments take time) put a speed limit on the rate of new scientific breakthroughs. But on the other hand, big discoveries happen somewhat randomly. The existence of CRISPR as a natural component of bacteria had been known since the 80s, but it took another 25 years until it occurred to someone that it could be used to edit genes in live animals. There really is no technological reason why CRISPR couldn’t have been invented at least a decade earlier. With AI, it is likely that those kinds of discoveries can be pulled forward.

To summarize the above, my basic prediction is that AI-enabled biology and medicine will allow us to compress the progress that human biologists would have achieved over the next 50-100 years into 5-10 years. I’ll refer to this as the “compressed 21st century”: the idea that after powerful AI is developed, we will in a few years make all the progress in biology and medicine that we would have made in the whole 21st century.

I like this a lot, although the 5 years sounds hopelessly optimistic to me. In the real world, professors are desperately trying to find ways to detect or prevent GPT usage among students, instead of deploying it at scale to accelerate science! But this does conceptually make sense, and if true, would be unequivocally awesome! Dario proceeds to list his ideas of what specific inventions might be made, but I’ll skip that because that’s just, like, his opinion man.

Next, on how brains work:

There is one thing we should add to this basic picture, which is that some of the things we’ve learned (or are learning) about AI itself in the last few years are likely to help advance neuroscience, even if it continues to be done only by humans. Interpretability is an obvious example: although biological neurons superficially operate in a completely different manner from artificial neurons ([…..]), the basic question of “how do distributed, trained networks of simple units that perform combined linear/non-linear operations work together to perform important computations” is the same, and I strongly suspect the details of individual neuron communication will be abstracted away in most of the interesting questions about computation and circuits. As just one example of this, a computational mechanism discovered by interpretability researchers in AI systems was recently rediscovered in the brains of mice.

Translation: We don’t know what really happens inside an AI Model, but for many good reasons smart people are trying their best to find out. This is called interpretability research. Apparently, and this was news to me, artificial neural nets and actual brains have evolved similar strategies to achieve similar goals. Mice and AI vision models have similar computation methods to process images. That’s cool!

Apart from these snippets, the essay reaches far: increasing human lifespan by 50% by eliminating most cancers, eliminating all genetic diseases, solving food security and climate change. I do believe these are all going to be possible in the next 100 years, but not within the next 10. A mix of human, data, and AI constraints will slow us down. But the bottom line, which I definitely do buy into, is that AI offers credible paths to improve a lot of meaningful things by a lot, and that is more than enough reason to build it.

Bonus: OpenAI’s valuation is not as crazy as it seems

OpenAI just raised $6.6Bn in funding at a $160Bn valuation, in what’s touted as the largest Venture Capital round in history. It was ridiculed in advance, but that has died down as they pulled off the round. Notably, they avoided directly raising from the Saudis, a good sign. Via this report from The Information we also got some new numbers about the company. OpenAI expects to turn profitable in 2029, at $100Bn revenue, requiring a total of $44Bn in cumulative funding to get there. If we see their role as one of the leading providers in a transformative new cloud service, their position should be similar to AWS or Microsoft Azure in Cloud. Azure did $70Bn last year and AWS did $99Bn. Since OpenAI also has a meaningful end-user business via ChatGPT, which those cloud players do not, the $100Bn does not seem that crazy. If we assume that they have a sustainable competitive edge in foundational models, that means the valuation could even be a pretty good deal. But so far it looks as if these models are converging on a certain performance ceiling. On the other hand, Strawberry is certainly interesting. It does feel as though OpenAI continues to be around 6 months ahead of everyone else. A lot will hinge on GPT5.

The nature of this investment ‘bubble’ makes it not that bubbly. All major asset bubbles in history have shared a component of retail participation. During the tulip mania of the 1630s in Holland, everyone was in on it. At some point, a tulip bulb was worth more than a house. Definitely bigger than NFTs. In the dotcom boom of the late 90s, all of those internet companies went public. Retail investors got in BIG TIME. Many regular people lost tons of money. The same happened in the housing bubble that led up to the 2008 crisis. This is not happening right now. All of that crazy investment in OpenAI, Anthropic, etc. is done by institutional investors. In the case of this OpenAI round, Microsoft and Nvidia invested. Google, Meta and Amazon are also pouring billions into AI via various constructions. Those companies all have money to burn anyway!! If, or when, this bubble collapses, no retail investors are going to get hurt. Besides, inflation is back under control and the US economy is crushing it right now in every other respect, so it’s probably fine.

That was today’s roundup, in essay form. Had to get some AI stuff out of my system after those self-indulgent life stories.

GPT (Generative Pretrained Transformer) model, also “Gen AI”: This is the category of AI models that contains Large Language Models (LLM), and also Generative image, video, audio, etc models (which are not LLMs as they are not language).

ML (Machine Learning): The fundamental method of giving a neural network tons of data, from which it learns to recognize patterns or generate new patterns. This covers also things like self-driving cars. GPTs are contained within the broader ML space.

AI: I’ll just use this in a general sense, like everyone does. It will usually refer to GPTs nowadays, but also to other ML based models, or even future models that use different technologies.